Welcome to today’s post.

In today’s post I will be explaining how to manage output response cache keys within a web application, and how they result in the application performance. I will then give examples on how to manage output cache keys within an ASP.NET Core 7.0 web API application.

In a previous post I explained what output caching is, compared it to response caching, and showed how to improve performance within a web API application by using some of the attributes of the output caching middleware.

The ways in which we can improve output response caching include:

- Caching the content output response frequently used by API requests.

- Ensure content is refreshed periodically and the cache invalidated so that displayed data is current with backend data.

- Evict (invalidate/remove) cache keys that correspond to modified underlying data.

- Ensure cache keys of high use API requests can handle concurrent requests for cache key refreshes.

The caching of output responses is done automatically by the output caching middleware and was explained in the previous post with the use of the [OutputCache] attribute and properties within API controller classes and methods.

The control of periodic cache refreshes is achieved with the use of the Duration property, with shorter cache expiry durations used for content output that would change more frequently. Content that is predominantly static with use a long cache expiry duration.

I will next cover the eviction (invalidation) of output cache keys and show how that is done in an ASP.NET Core web API application.

Management and Control of Output Caching

With output caching, there are limitations on the total storage of cache keys on the web server. By default, the maximum storage size of cache keys is set to 100MB.

Below is an example where we set the maximum storage size to 10MB:

builder.Services.AddOutputCache(options =>

{

options.SizeLimit = 10

});

When the total storage size of cache keys reaches the limit, no further keys can be added to the cache store. It is only after the oldest keys are removed from the cache store can new cache keys be added to the cache. The removal of cache keys from the store is known as key eviction. Keys are automatically evicted from the store to ensure the maximum storage is not exceeded.

For keys to be added to the output cache, the key needs to be specified within the HTTP URL request. Creating an identical Details method and removing the [HttpGet(..)] attribute from the method header will allow the output caching service to add query keys to the output cache from submitted requests. This is down as shown:

[OutputCache(Duration = int.MaxValue, VaryByQueryKeys = new[] { "id" })]

public async Task<ActionResult> Details2(int id)

{

…

}

We have seen how keys are added to the output cache through an HTTP request attribute. In the next section, I will show how to remove (or evict) keys from the output cache using tagging.

Using Tagging to Remove Cache Keys

In addition to automatic removal of cache keys, we can also manually remove cache keys from storage. This is done using the EvictByTagAsync() method of the IOutputCacheStore service.

One common way to remove cache keys is to group the endpoints by a tag. We can first set the tag for the above API method as shown in the application startup configuration:

builder.Services.AddOutputCache(options =>

{

options.AddBasePolicy(builder => builder

.With(c => c.HttpContext.Request.Path.StartsWithSegments("/api/Book"))

.Tag("tag-book "));

options.AddBasePolicy(builder => builder

.With(c => c.HttpContext.Request.Path.StartsWithSegments("/api/Book/Details2"))

.Tag("tag-book-details"));

…

});

An example of how to evict output cache keys is done by first executing some API requests on matching URL requests.

The new method, Details2() will now accept HTTP Get requests of this type with the id query string parameter:

http://localhost:25138/api/Book/Details2/?id=1

When the above request is not in the cache, the following request log will show:

Request started: "GET" http://localhost:25138/api/Book/Details2/?id=1

[23:11:33 INF] Executed DbCommand (1,408ms) [Parameters=[@__id_0='?' (DbType = Int32)], CommandType='Text', CommandTimeout='30']

SELECT TOP(2) [b].[ID], [b].[Author], [b].[DateCreated], [b].[DateUpdated], [b].[Edition], [b].[Genre], [b].[ISBN], [b].[Location], [b].[MediaType], [b].[Title], [b].[YearPublished]

FROM [Books] AS [b]

WHERE [b].[ID] = @__id_0

Response sent: http://localhost:25138/api/Book/Details2/?id=1 with HTTP status 200.0

[23:11:36 INF] HTTP GET /api/Book/Details2/ responded 200 in 29204.6768 ms

The first item to be cached will take longer than subsequent requests to process as it is checked against the cache, then added to the cache and tagged if the URL segment pattern matches.

If we submit an additional request:

http://localhost:25138/api/Book/Details2/?id=2

and debug the output cache instance within our controller as shown:

public BookController(ApplicationDbContext db,

ILogger<BookController> logger, ..

IOutputCacheStore outputCache, ..

)

{

_db = db;

_logger = logger;

..

_outputCache = outputCache; // <--- set breakpoint here.

..

}

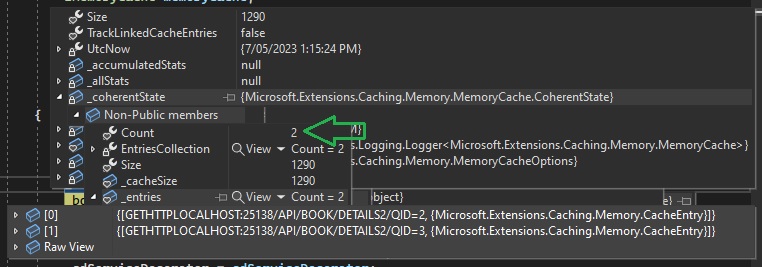

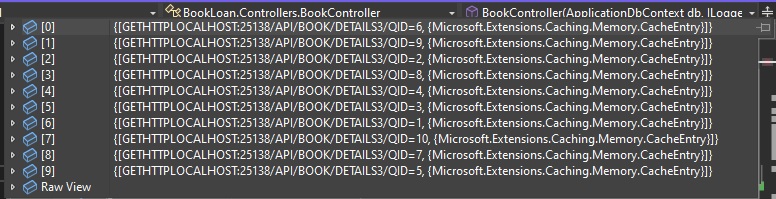

When the breakpoint is hit on the indicated line, we will see the number of entries of the cached keys increase in the output cache coherent state internal property:

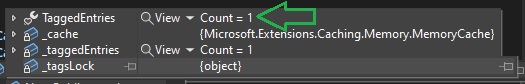

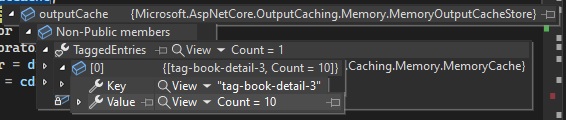

In addition, the number of tagged entries that matches the number of methods with matching URL segments shows in the instance property, TaggedEntries as shown:

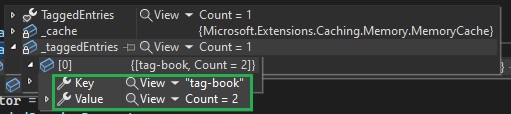

Each tagged entry in the TaggedEntries property consists of key-value pairs:

To evict the keys from the output cache, we can run the purge API request using the API method as shown:

[HttpGet("api/[controller]/purge/{tag}")]

public async Task Action Purge(IOutputCacheStore cache, string tag)

{

await cache.EvictByTagAsync(tag, default);

}

To evict specific keys grouped by the tag, tag-book-details from the output cache, we can run the purge API request as shown:

http://localhost:25138/api/Book/purge/tag-book-details

Debug console output is shown below:

Request started: "GET" http://localhost:25138/api/Book/purge/tag-book-details

[23:53:59 INF] HTTP GET /api/Book/purge/tag-book responded 200 in 530.6432 ms

Response sent: http://localhost:25138/api/Book/purge/tag-book-details with HTTP status 200.0

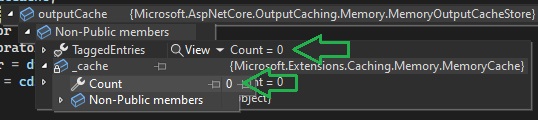

In the debugger we can see the TaggedEntries property is reset back to zero, and the cache count reset back to zero as shown:

When the keys are removed from the cache, each new request submitted with one of the evicted keys will call the controller method and re-insert the evicted key from the request. Keys submitted with cached keys will bypass the controller method and return the cached output response.

In the next section, I will show how excessive requests that can cause concurrency issues within an API method can be handled and controlled by locking or unlocking the cache keys.

Ensuring Response Caching Handles Concurrent Requests

When multiple clients dispatch requests to the same web API method, the risk of different threads from each client request causing the request to be blocked or rejected by the response caching middleware is quite high. When the cache key for the API is already evicted (invalidated) and is ready to be refreshed by the middleware, when multiple requests call the same API method, they will attempt to repopulate the cache key. This concurrent access to the cache keys can cause a race condition, so many of the requests will be rejected to avoid data corruption of the cache resource. When many concurrent requests attempt to refresh and populate the cache at the same time, this is known as a cache stampede.

With the response output caching middleware, the issue with concurrent requests and cache stampedes is handled by default by utilizing resource locking. What resource locking does is to ensure that whenever there are many concurrent requests accessing an invalidated cache key, only the first request is permitted to update and refresh the cache key. The subsequent requests are forced to wait in a queue until the first request unlocks access to the cache key. After unlocking, the next request is permitted to apply its cache refresh to the cache key collection. This guarantees the integrity of the in-memory cache keys.

Situations where request concurrency is not required for an API method would include content that almost never changes and has a lengthy cache expiry duration. In this case we can unlock the resource for the API method as we are not concerned about responses being rejected by the server middleware.

Ensuring cache keys of high use API requests can handle concurrent requests for cache key refreshes is available by default in the output caching policy.

I will cover two scenarios of resource locking and unlocking with single and multiple requests and compare the outputs. Removing (unlocking) the concurrent handling of concurrent requests for cache key refreshes is done by using the SetLocking() output caching policy method as shown:

builder.Services.AddOutputCache(options =>

{

...

options.AddPolicy("NoLockBookDetails", builder => builder.SetLocking(false));

});

Applying the above policy to the API method done as shown:

[OutputCache(PolicyName = "NoLockBookDetails",

Duration = int.MaxValue,

VaryByQueryKeys = new[] { "id" })

]

public async Task<ActionResult> Details3(int id)

{

return await Details(id);

}

The first experiment below covers unlocked output cache key resources with one request thread:

Experiment: Single thread excessive requests with default resource locking

The default locking of output requests can be demonstrated with the following commands submitted to a web API service from a single thread.

Sent request:

curl http://localhost:25138/api/Book/Details3/?id=1 &

curl http://localhost:25138/api/Book/Details3/?id=2 &

curl http://localhost:25138/api/Book/Details3/?id=3 &

curl http://localhost:25138/api/Book/Details3/?id=4 &

curl http://localhost:25138/api/Book/Details3/?id=5

Log output:

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=1

Response sent: http://localhost:25138/api/Book/Details3/?id=1 with HTTP status 200.0

[00:53:18 INF] HTTP GET /api/Book/Details3/ responded 200 in 4003.1217 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=2

Response sent: http://localhost:25138/api/Book/Details3/?id=2 with HTTP status 200.0

[00:53:19 INF] HTTP GET /api/Book/Details3/ responded 200 in 149.3333 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=3

Response sent: http://localhost:25138/api/Book/Details3/?id=3 with HTTP status 200.0

[00:53:20 INF] HTTP GET /api/Book/Details3/ responded 200 in 602.9932 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=4

Response sent: http://localhost:25138/api/Book/Details3/?id=4 with HTTP status 200.0

[00:53:20 INF] HTTP GET /api/Book/Details3/ responded 200 in 12.6429 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=5

Response sent: http://localhost:25138/api/Book/Details3/?id=5 with HTTP status 200.0

[00:53:25 INF] HTTP GET /api/Book/Details3/ responded 200 in 4335.0005 ms

We can see from the log output that there are no concurrency or response rejections, with the output being un-cached taking longer to regenerate (>50ms). Responses that were quick were for no content.

The second experiment below covers the default locked output cache key resources (by removing the line containing the policy configuration builder.SetLocking(false) from the startup):

Experiment: Multiple thread excessive requests with no resource locking

In the following experiment, I submit many requests concurrently to the API method without applying any locking to the API method.

The commands are shown below:

Command 1:

curl http://localhost:25138/api/Book/Details3/?id=1 &

curl http://localhost:25138/api/Book/Details3/?id=2 &

curl http://localhost:25138/api/Book/Details3/?id=3 &

curl http://localhost:25138/api/Book/Details3/?id=4 &

curl http://localhost:25138/api/Book/Details3/?id=5

Command 2:

curl http://localhost:25138/api/Book/Details3/?id=1 &

curl http://localhost:25138/api/Book/Details3/?id=2 &

curl http://localhost:25138/api/Book/Details3/?id=3 &

curl http://localhost:25138/api/Book/Details3/?id=4 &

curl http://localhost:25138/api/Book/Details3/?id=5

The log output from the above requests is shown below:

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=1

Response sent: http://localhost:25138/api/Book/Details3/?id=1 with HTTP status 200.0

[01:04:36 INF] HTTP GET /api/Book/Details3/ responded 200 in 62.9821 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=2

Response sent: http://localhost:25138/api/Book/Details3/?id=2 with HTTP status 200.0

[01:04:37 INF] HTTP GET /api/Book/Details3/ responded 200 in 16.3333 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=1

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=3

Response sent: http://localhost:25138/api/Book/Details3/?id=3 with HTTP status 429.0

[01:04:37 WRN] OnRejected: User Anonymous endpoint:/api/Book/Details3/ 127.0.0.1

[01:04:37 INF] HTTP GET /api/Book/Details3/ responded 429 in 41.2779 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=4

Response sent: http://localhost:25138/api/Book/Details3/?id=4 with HTTP status 429.0

[01:04:39 WRN] OnRejected: User Anonymous endpoint:/api/Book/Details3/ 127.0.0.1

[01:04:39 INF] HTTP GET /api/Book/Details3/ responded 429 in 1.1022 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=5

Response sent: http://localhost:25138/api/Book/Details3/?id=5 with HTTP status 429.0

[01:04:39 WRN] OnRejected: User Anonymous endpoint:/api/Book/Details3/ 127.0.0.1

[01:04:39 INF] HTTP GET /api/Book/Details3/ responded 429 in 0.3482 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=5

Response sent: http://localhost:25138/api/Book/Details3/?id=5 with HTTP status 429.0

[01:04:39 WRN] OnRejected: User Anonymous endpoint:/api/Book/Details3/ 127.0.0.1

[01:04:39 INF] HTTP GET /api/Book/Details3/ responded 429 in 0.3482 ms

Response sent: http://localhost:25138/api/Book/Details3/?id=1 with HTTP status 200.0

[01:04:41 INF] HTTP GET /api/Book/Details3/ responded 200 in 3266.3173 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=2

[01:04:41 INF] HTTP GET /api/Book/Details3/ responded 200 in 41.8327 ms

Response sent: http://localhost:25138/api/Book/Details3/?id=2 with HTTP status 200.0

[01:04:46 INF] HTTP GET /api/Book/Details3/ responded 200 in 4966.9963 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=3

Response sent: http://localhost:25138/api/Book/Details3/?id=3 with HTTP status 200.0

[01:04:46 INF] HTTP GET /api/Book/Details3/ responded 200 in 30.7588 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=4

Response sent: http://localhost:25138/api/Book/Details3/?id=4 with HTTP status 200.0

[01:04:51 INF] HTTP GET /api/Book/Details3/ responded 200 in 4924.7395 ms

Request started: "GET" http://localhost:25138/api/Book/Details3/?id=5

Response sent: http://localhost:25138/api/Book/Details3/?id=5 with HTTP status 200.0

[01:04:51 INF] HTTP GET /api/Book/Details3/ responded 200 in 13.3396 ms

We can see from the log output that there were four URI requests with response output rejections (HTTP status 429) were the shortest duration return responses. The remaining URI requests with successful response output (HTTP status 200) were successfully added as output cache keys with regenerated output responses. The successful responses had the longest output response durations.

The third experiment below covers the default locked output cache key resources with two request threads:

Experiment: Multiple threads with excessive cached requests

The following experiment involves submitting multiple concurrent commands to the API method that has output caching locked:

Send:

Command 1:

curl http://localhost:25138/api/Book/Details2/?id=1 &

curl http://localhost:25138/api/Book/Details2/?id=2 &

curl http://localhost:25138/api/Book/Details2/?id=3 &

curl http://localhost:25138/api/Book/Details2/?id=4 &

curl http://localhost:25138/api/Book/Details2/?id=5

Command 2:

curl http://localhost:25138/api/Book/Details2/?id=1 &

curl http://localhost:25138/api/Book/Details2/?id=2 &

curl http://localhost:25138/api/Book/Details2/?id=3 &

curl http://localhost:25138/api/Book/Details2/?id=4 &

curl http://localhost:25138/api/Book/Details2/?id=5

Log output:

Request started: "GET" http://localhost:25138/api/Book/Details2/?id=1

Response sent: http://localhost:25138/api/Book/Details2/?id=1 with HTTP status 200.0

[01:57:11 INF] HTTP GET /api/Book/Details2/ responded 200 in 294.3003 ms

Response sent: http://localhost:25138/api/Book/Details2/?id=1 with HTTP status 200.0

Request started: "GET" http://localhost:25138/api/Book/Details2/?id=2

Response sent: http://localhost:25138/api/Book/Details2/?id=2 with HTTP status 200.0

[01:57:12 INF] HTTP GET /api/Book/Details2/ responded 200 in 21.6123 ms

Request started: "GET" http://localhost:25138/api/Book/Details2/?id=3

Request started: "GET" http://localhost:25138/api/Book/Details2/?id=1

Response sent: http://localhost:25138/api/Book/Details2/?id=1 with HTTP status 200.0

[01:57:13 INF] HTTP GET /api/Book/Details2/ responded 200 in 17.4843 ms

Request started: "GET" http://localhost:25138/api/Book/Details2/?id=2

Response sent: http://localhost:25138/api/Book/Details2/?id=2 with HTTP status 200.0

[01:57:14 INF] HTTP GET /api/Book/Details2/ responded 200 in 6.1680 ms

Request started: "GET" http://localhost:25138/api/Book/Details2/?id=3

Response sent: http://localhost:25138/api/Book/Details2/?id=3 with HTTP status 200.0

[01:57:16 INF] HTTP GET /api/Book/Details2/ responded 200 in 3491.1638 ms

[01:57:16 INF] HTTP GET /api/Book/Details2/ responded 200 in 1378.8028 ms

Request started: "GET" http://localhost:25138/api/Book/Details2/?id=4

Response sent: http://localhost:25138/api/Book/Details2/?id=4 with HTTP status 200.0

[01:57:16 INF] HTTP GET /api/Book/Details2/ responded 200 in 12.7968 ms

[01:57:16 INF] HTTP GET /api/Book/Details2/ responded 200 in 18.0066 ms

Request started: "GET" http://localhost:25138/api/Book/Details2/?id=5

Response sent: http://localhost:25138/api/Book/Details2/?id=5 with HTTP status 200.0

[01:57:21 INF] HTTP GET /api/Book/Details2/ responded 200 in 3907.7059 ms

[01:57:21 INF] HTTP GET /api/Book/Details2/ responded 200 in 3482.6537 ms

We can see from the log output that all URI requests with successful response output (HTTP status 200) were successfully added as output cache keys with regenerated output responses. Requests that had no responses were already added to the output cache and so bypassed the API method.

Following a run of a command with 10 API URI requests, a typical cache output key collection in-memory entries would look like the following:

Similarly, there would be 10 tagged output cache keys as shown:

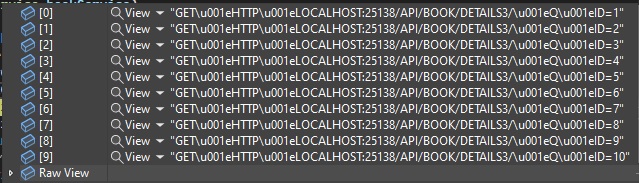

The 10 tagged output cache keys are shown in the collection below:

The above discussion and observations show us how to manage the response output cache keys in ASP.Net Core 7.0. I showed how to manage cache storage, include query keys to control caching within API methods, then manage cache keys including the eviction (removal) of keys by referring to tagged groups of cache keys. Unlike response caching middleware, where we could not manually evict (remove) keys from the cache, which is in either the client or server headers, the output cache middleware allows more control of key removal.

I then showed how the default locking of output response cache keys benefits the caching of keys for multiple concurrent requests, avoiding the known cache stampede issue. I demonstrated an example of how concurrent request threads with unlocked cache key server resources can causing a cache stampede with rejected response output.

That is all for today’s post.

I hope you have found this post useful and informative.

Andrew Halil is a blogger, author and software developer with expertise of many areas in the information technology industry including full-stack web and native cloud based development, test driven development and Devops.