Welcome to today’s post.

In today’s post I will be discussing how we can use Azure Functions to help us build serverless workflows.

Azure Functions are stateless by default and do not persist any data between calls. In this post, I will show how to use a type of Azure Function that does persist data between calls. This type of Azure Function is known as a Durable Function.

What Exactly are Durable Azure Functions?

Durable Azure functions are serverless functions that have two additional important qualities that normal stateless serverless functions do not have:

- State persistence

- Long running tasks

Serverless workflows are built by combining one or more durable Azure functions. Each durable function is an activity within a workflow. With additional programming logic we can control the flow of the workflow and decide which activities to execute next. Each activity returns output, which can be used to determine whether the workflow ends with a result or continues with further activities.

A Typical Scenario for Azure Durable Functions

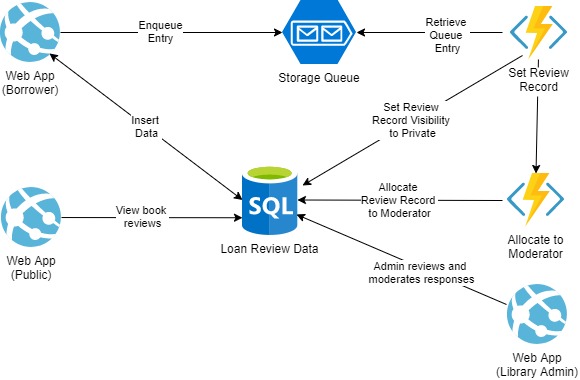

An example scenario of a serverless workflow is a library application that lets borrowers review books they have borrowed. The review record is posted to an Azure storage queue, where it is picked up by a function that starts an Azure orchestration. The orchestration sets the visibility status of the review record, then allocates the record to a reviewer (approver) using a second function. The orchestration is therefore a chain of serverless functions that set the state of the record and then allocate it to an approver, who decides whether to display the review, moderate the comment, or have the record removed.

Orchestrations like this can model fairly complex workflows, which makes them useful across many business scenarios.

Creation of a Durable Function in Visual Studio

Creating and implementing a durable Azure Orchestration is done as follows:

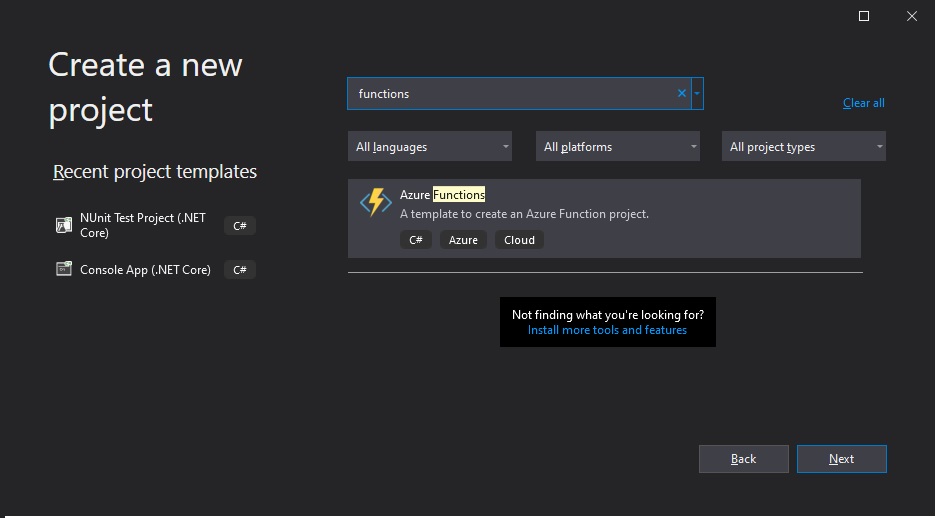

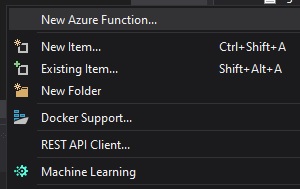

First create a new Azure functions project:

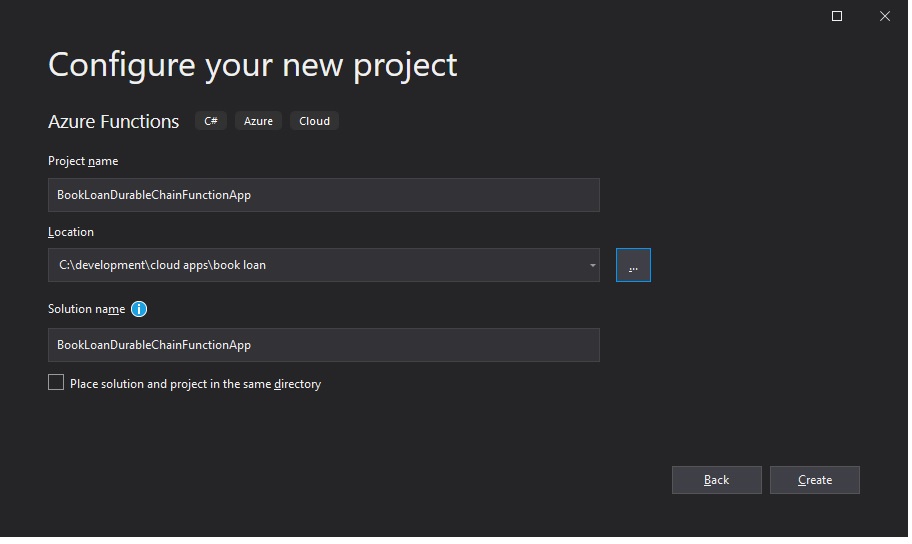

Configure the project as shown:

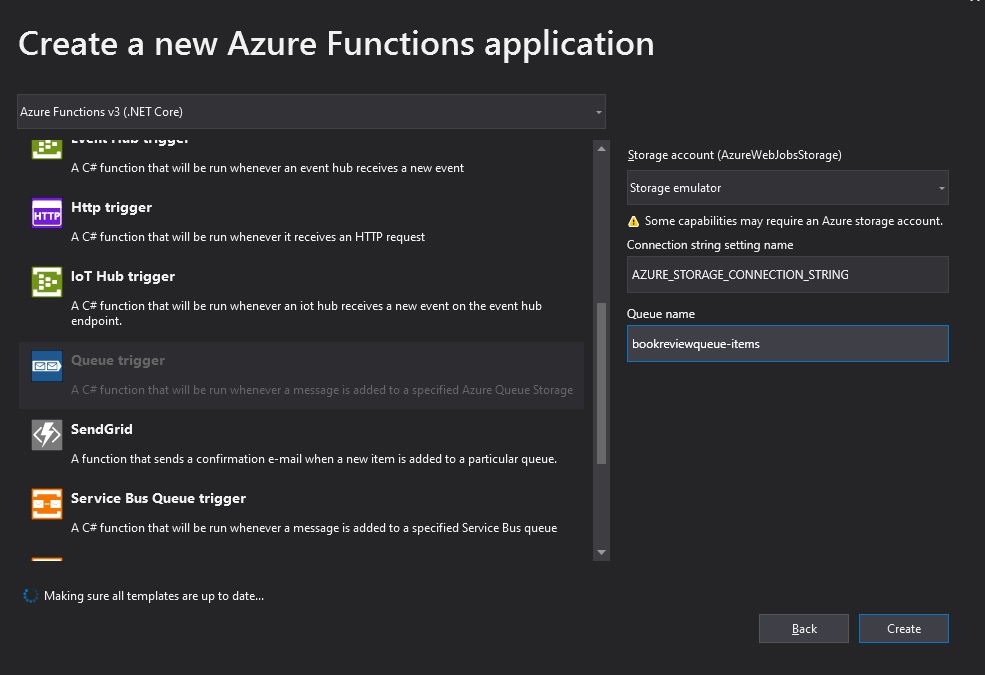

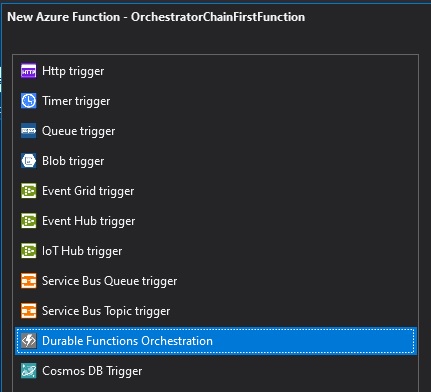

Select the function application type.

We can create the app either as an empty application or with one of the existing trigger types. These include HTTP, IoT Hub, Service Bus, and Storage queue.

We select the Queue trigger function application:

After this, we create a new Azure function:

We make the Azure function a Durable Function Orchestration:

The resulting function app can be run in its default state.

The local.settings.json file will contain the following key, which points the default storage at the local emulator:

"AzureWebJobsStorage": "UseDevelopmentStorage=true",

In the next section, I will show how to run the durable function within a local development environment.

Running the Durable Function in a Local Development Environment

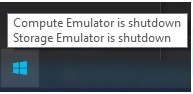

Before we can run it within the local environment, we will need to enable the two following components in our environment:

- Azure Compute Emulator

- Azure Storage Emulator

In a previous post I showed how to install the Azure Storage and Compute Emulator components in a local development environment.

Before we start the function application, we can check the emulator's task bar icon, which displays its current running state:

Start up the emulators as shown:

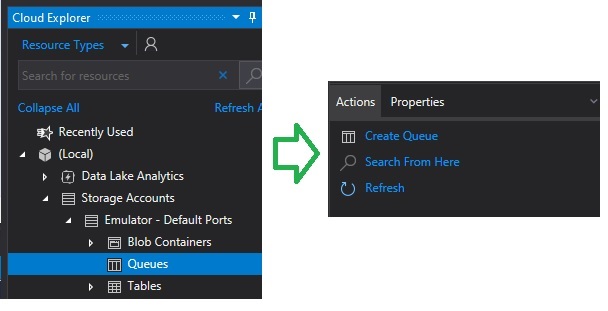

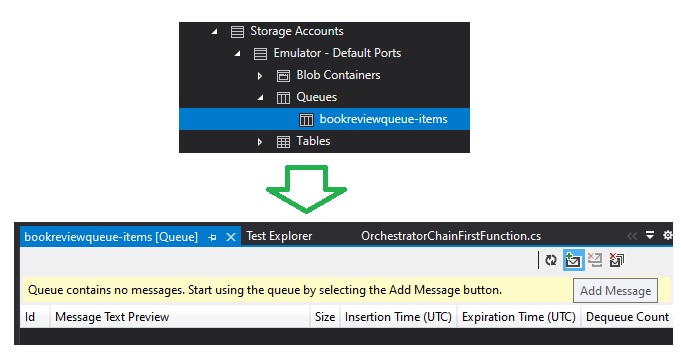

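Next, in our local storage emulator, open the queues and create a new queue. The queue trigger function shown later in this post listens on a queue named bookreviewqueue-items, so the new queue should use that name.

Once the queue is created, we open it and create a new message in the queue list window: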

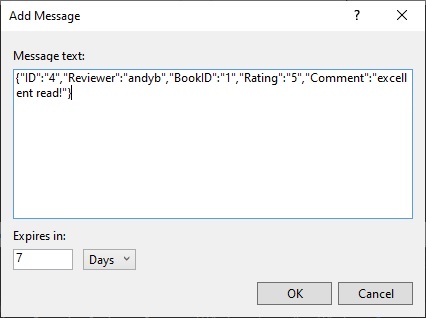

We then add a new message in JSON format as shown:

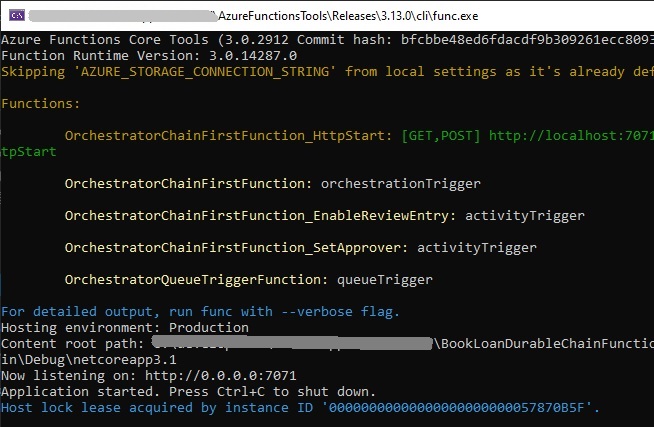
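As a rough illustration, a message might look like the following. The property names match the ReviewViewModel class defined later in this post; every value here is made up for the example, and properties that the post elides are simply left out.

```json
{
  "ID": 1,
  "Reviewer": "reviewer@example.com",
  "Author": "Example Author",
  "Title": "Example Book Title",
  "Heading": "A good read",
  "Comment": "Enjoyed this book from start to finish.",
  "Rating": 4,
  "BookID": 10,
  "IsVisible": false,
  "Approver": ""
}
```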

Next, run the function application. The console will open and display the names of the queue trigger function, the orchestration trigger function, and the custom orchestration activity functions:

When we use a storage emulator, the connection string for our local storage emulator looks as shown (wrapped here for readability; in the settings file it is a single line):

    "AccountName=devstoreaccount1;
    AccountKey=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx;
    DefaultEndpointsProtocol=http;
    BlobEndpoint=http://127.0.0.1:10000/devstoreaccount1;
    QueueEndpoint=http://127.0.0.1:10001/devstoreaccount1;
    TableEndpoint=http://127.0.0.1:10002/devstoreaccount1;",
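Pulling these settings together, a local.settings.json for this project might look roughly like the sketch below. It assumes the in-process .NET worker (FUNCTIONS_WORKER_RUNTIME set to dotnet) and uses the UseDevelopmentStorage=true shortcut, which stands for the emulator connection string above, as the value of the AZURE_STORAGE_CONNECTION_STRING_DEV setting that the queue trigger function in this post reads. Treat the exact set of keys as an assumption rather than the actual file from the project.

```json
{
  "IsEncrypted": false,
  "Values": {
    "AzureWebJobsStorage": "UseDevelopmentStorage=true",
    "FUNCTIONS_WORKER_RUNTIME": "dotnet",
    "AZURE_STORAGE_CONNECTION_STRING_DEV": "UseDevelopmentStorage=true"
  }
}
```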

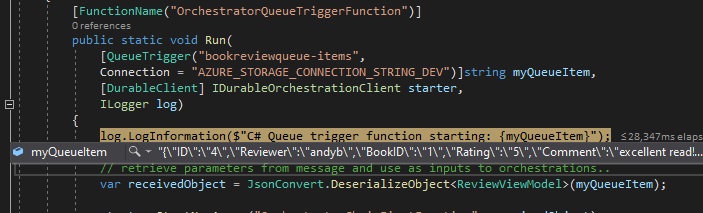

If we run the Azure function app in the debugger with breakpoints in each function, the first function we can inspect is the queue trigger function. The queue trigger binds the posted queue message to the leading string parameter, myQueueItem.

The Azure queue trigger function is shown below:

    using System.Threading.Tasks;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Host;
    using Microsoft.Extensions.Logging;
    using Microsoft.Azure.WebJobs.Extensions.DurableTask;
    using Newtonsoft.Json;

    namespace BookLoanDurableChainFunctionApp
    {
        public static class OrchestratorQueueTriggerFunction
        {
            [FunctionName("OrchestratorQueueTriggerFunction")]
            public static async Task Run(
                [QueueTrigger("bookreviewqueue-items",
                    Connection = "AZURE_STORAGE_CONNECTION_STRING_DEV")] string myQueueItem,
                [DurableClient] IDurableOrchestrationClient starter,
                ILogger log)
            {
                log.LogInformation($"C# Queue trigger function starting: {myQueueItem}");

                // Deserialize the queue message into the review model.
                var receivedObject = JsonConvert.DeserializeObject<ReviewViewModel>(myQueueItem);

                // Await the call so that a failure to start the orchestration surfaces here.
                await starter.StartNewAsync("OrchestratorChainFirstFunction", receivedObject);

                log.LogInformation($"C# Queue trigger function processed: {myQueueItem}");
            }
        }
    }

In the next section, I will show how we debug a chain of durable functions from an orchestration client.

Running a Durable Orchestration Client

To start an orchestration and make use of the orchestration client API, we also need to declare a parameter of type IDurableOrchestrationClient in our trigger function.

To make a call to our orchestration trigger function with structured data, we do the following:

- Deserialize the message data.

- Call the orchestration API method StartNewAsync().

We do this as shown:

    var receivedObject = JsonConvert.DeserializeObject<ReviewViewModel>(myQueueItem);
    await starter.StartNewAsync("OrchestratorChainFirstFunction", receivedObject);

Our class that stores the definition of the message structure is defined as shown:

    public class ReviewViewModel
    {
        public int ID { get; set; }
        public string Reviewer { get; set; }
        public string Author { get; set; }
        public string Title { get; set; }
        public string Heading { get; set; }
        public string Comment { get; set; }
        public int Rating { get; set; }
        …
        public int BookID { get; set; }
        public bool IsVisible { get; set; }
        public string Approver { get; set; }
        …
    }

Our orchestration trigger method takes a parameter of type IDurableOrchestrationContext, which allows us to obtain the input object and make calls to orchestration activities.

The API method we will use to make orchestration activity calls is:

CallActivityAsync(string functionName, object input)

Where:

functionName is the name of the activity function we wish to call.

input is the object we wish to pass as an input parameter to the activity function.

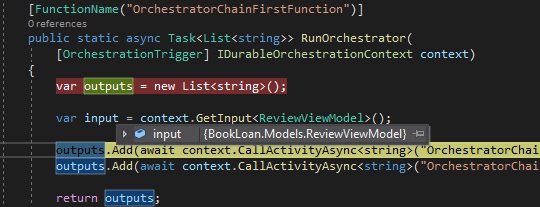

Below is our orchestration trigger function:

    using System.Collections.Generic;
    using System.Threading.Tasks;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Extensions.DurableTask;
    using Microsoft.Extensions.Logging;

    namespace BookLoanDurableChainFunctionApp
    {
        public static class OrchestratorChainFirstFunction
        {
            [FunctionName("OrchestratorChainFirstFunction")]
            public static async Task<List<string>> RunOrchestrator(
                [OrchestrationTrigger] IDurableOrchestrationContext context)
            {
                var outputs = new List<string>();

                // Read the ReviewViewModel passed in by the queue trigger function.
                var input = context.GetInput<ReviewViewModel>();

                // Call the two activities in sequence, collecting each result.
                outputs.Add(await context.CallActivityAsync<string>("OrchestratorChainFirstFunction_SetApprover", input));
                outputs.Add(await context.CallActivityAsync<string>("OrchestratorChainFirstFunction_EnableReviewEntry", input));

                return outputs;
            }

            // The activity functions shown later in this post can be declared here.
        }
    }

The two calls we make to our activities form a function chaining pattern, in which serverless functions run sequentially and each call completes before the next begins. In other types of workflow orchestration, we can run functions in parallel, wait for human interaction, or hold the workflow in a waiting state.

    await context.CallActivityAsync<string>("OrchestratorChainFirstFunction_SetApprover", input);
    await context.CallActivityAsync<string>("OrchestratorChainFirstFunction_EnableReviewEntry", input);
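For contrast with the chain above, the parallel (fan-out/fan-in) pattern mentioned earlier might be sketched as follows. This is only an illustration and not part of the original project: the orchestrator name OrchestratorFanOutFunction is hypothetical, it reuses the two activity names and the ReviewViewModel class from this post, and it assumes the two activities do not depend on each other's results.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

namespace BookLoanDurableChainFunctionApp
{
    public static class OrchestratorFanOutFunction
    {
        // Hypothetical orchestrator name, used only for this sketch.
        [FunctionName("OrchestratorFanOutFunction")]
        public static async Task<string[]> RunOrchestrator(
            [OrchestrationTrigger] IDurableOrchestrationContext context)
        {
            var input = context.GetInput<ReviewViewModel>();

            // Fan out: schedule both activities without awaiting each one in turn.
            var tasks = new List<Task<string>>
            {
                context.CallActivityAsync<string>("OrchestratorChainFirstFunction_SetApprover", input),
                context.CallActivityAsync<string>("OrchestratorChainFirstFunction_EnableReviewEntry", input)
            };

            // Fan in: wait for all activities; results keep the order of the task list.
            return await Task.WhenAll(tasks);
        }
    }
}
```

The only difference from the chained version is that the activity tasks are collected first and awaited together, so neither call waits for the other to finish.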

When our orchestration activities are called, the input can be processed and used to execute more detailed business processes such as calling API services, sending email notifications, or performing database operations.

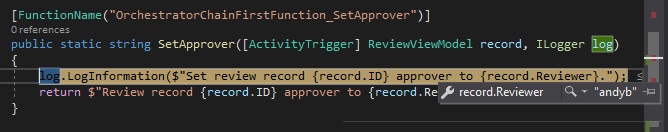

The two orchestration activity functions are shown below:

    [FunctionName("OrchestratorChainFirstFunction_SetApprover")]
    public static string SetApprover([ActivityTrigger] ReviewViewModel record,
        ILogger log)
    {
        log.LogInformation($"Set review record {record.ID} approver to {record.Reviewer}.");
        return $"Review record {record.ID} approver to {record.Reviewer}!";
    }

and

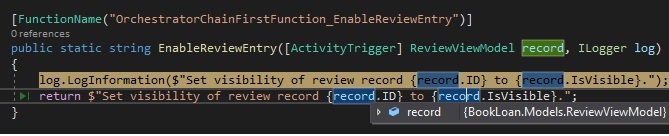

    [FunctionName("OrchestratorChainFirstFunction_EnableReviewEntry")]
    public static string EnableReviewEntry([ActivityTrigger] ReviewViewModel record,
        ILogger log)
    {
        log.LogInformation($"Set visibility of review record {record.ID} to {record.IsVisible}.");
        return $"Set visibility of review record {record.ID} to {record.IsVisible}.";
    }

If we get the following error during debugging of our Azure functions:

    An unhandled exception has occurred. Host is shutting down.
    Microsoft.Azure.WebJobs.Extensions.Storage: The operation 'GetMessages' with
    id 'e8f155d0-c2dc-47f7-bf82-624fc110ee4b' did not complete in '00:02:00'.

It means that our durable function default timeout has been exceeded. If this occurs, then close the console and restart the app to resume debugging.

If we wish to extend the timeout to beyond 2 minutes then we can set the following key in host.json with a higher timeout:

"functionTimeout": "00:05:00"

Be aware that the Consumption plan limits the timeout to a maximum of 10 minutes. If we require more than 10 minutes, or even an unbounded timeout, we will have to move to a Premium or Dedicated (App Service) plan.
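For reference, functionTimeout is a top-level key in host.json. A minimal sketch, assuming a version 2.0 host file with no other settings, would be:

```json
{
  "version": "2.0",
  "functionTimeout": "00:05:00"
}
```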

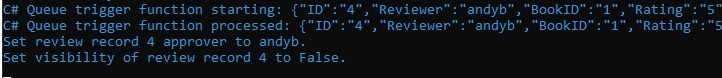

After the orchestration is executed, the output shows us that the orchestration activities have run and the input has been received successfully.

The next step will be to use dependency injection in our orchestration application, which lets us flexibly make use of additional services (as mentioned above) and gives our workflow orchestration more power to meet our application and business requirements. I will cover dependency injection in a later post.

The above should have given you more insight into what an Azure durable orchestration is capable of and ideas of how to extend its use for other function trigger types.

That’s all for today’s post.

I hope you found this post useful and informative.

Andrew Halil is a blogger, author and software developer with expertise in many areas of the information technology industry, including full-stack web and native cloud-based development, test-driven development and DevOps.