Welcome to today’s post.

In today’s post I will discuss how we can apply batching with Entity Framework Core.

With batching we can apply more than one INSERT or UPDATE operation to an SQL table with one command. What this means is a command is sent on one trip to SQL database, the operations are applied against the table, then a response is provided to the calling client. Being able to issue one command and response is the most efficient way we can apply the command from our client or API method.

In the first section I will show how to batch operations with data inserts, then later apply batch operations with inserts and updates.

Insert Batching Operations

I will first show how this works for an INSERT batching operation.

Suppose I have a JSON data file that consists of records that I would like to bulk insert into my Books database:

[

{

"title": "Test Book 1",

"author": "Test1, Author",

"yearpublished": 1814,

"edition": "Penguin",

"genre": "Fiction",

"isbn": "1",

"mediatype": "book",

"location": "sydney"

},

…

{

"title": "Test Book 20",

"author": "Test20, Author",

"yearpublished": 1993,

"edition": "Penguin",

"genre": "Fiction",

"isbn": "20",

"mediatype": "book",

"location": "sydney"

}

]

What I have done is to format the data as a JSON array, with the JSON objects enclosed within brackets [ … ].

I then implement an API controller that when run, will receive the above JSON array as an input, then call a service method to batch insert the JSON records into a Books table.

The API controller method is shown below:

[HttpPost("api/[controller]/BulkInsertBooks")]

public async Task<ActionResult> BulkInsertBooks(

[FromBody] List<BookViewModel> model)

{

try

{

await _bookService.BulkInsertBooks(model);

return Ok();

}

catch (Exception ex)

{

if (ex.InnerException != null)

return BadRequest(

$"Cannot insert book records: {ex.Message},

inner exception: {ex.InnerException.Message}"

);

return BadRequest($"Cannot insert book records: {ex.Message}");

}

}

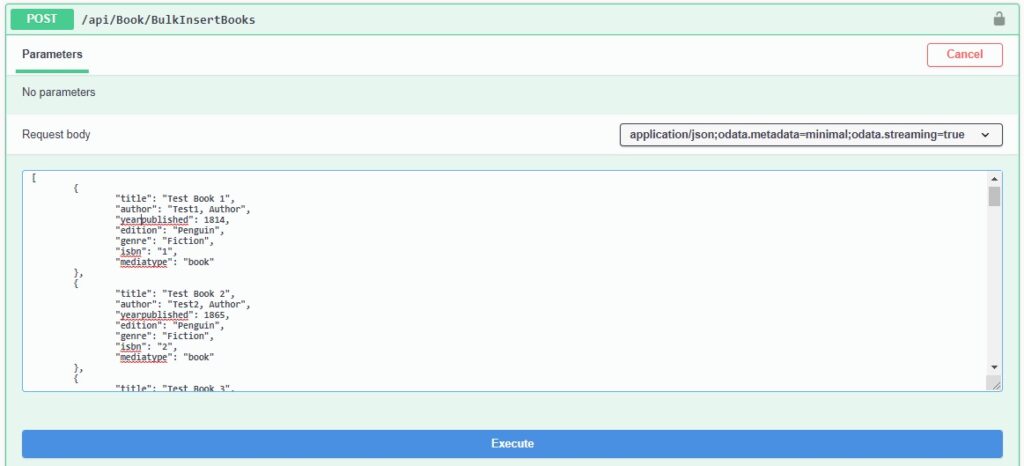

The API request is an HTTP POST method:

http://localhost:25138/api/Book/BulkInsertBooks

The JSON array request body payload is bound and converted to the input parameter, which is a list collection:

[FromBody] List<BookViewModel>

The service method BulkInsertBooks() takes the converted list of book objects, iterates the list, then appends each book object as a record into the current data context. Finally, the appended records are committed to the data context.

The method is shown below:

public async Task BulkInsertBooks(List<BookViewModel> books)

{

foreach (var book in books)

{

book.DateCreated = DateTime.Now;

book.DateUpdated = DateTime.Now;

await _bookRepository.AddAsync(book);

}

await _bookRepository.UnitOfWork.SaveChangesAsync();

}

As we can see, I have updated the DateCreated and DateUpdated fields of each object before appending the object to the data context.

To manually test this bulk insertion, I can either submit the API request using a tool like POSTMAN or with the Swagger interface as follows:

If we leave one of the required fields in the input data that is declared as Required from our POCO model:

public class BookViewModel

{

public int ID { get; set; }

[Required]

public string Title { get; set; }

[Required]

public string Author { get; set; }

public int YearPublished { get; set; }

public string Genre { get; set; }

public string Edition { get; set; }

public string ISBN { get; set; }

[Required]

public string Location { get; set; }

[Required]

public string MediaType { get; set; }

public DateTime DateCreated { get; set; }

public DateTime DateUpdated { get; set; }

public BookViewModel() { }

}

Then the following error exception will be thrown:

Cannot insert book records: An error occurred while updating the entries. See the inner exception for details., inner exception: Cannot insert the value NULL into column 'Location', table 'aspnet-BookCatalog.dbo.Books'; column does not allow nulls. UPDATE fails. The statement has been terminated.

With any bulk insertion methods, we should apply validations to each record to ensure the data is consistent with the application POCO models and any other related business rules. If a validation fails, then this should ideally be returned to the client as an error in the response object.

When the bulk insert is successful, the following console log output will display:

Microsoft.AspNetCore.Hosting.Diagnostics: Information: Request starting HTTP/1.1 POST http://localhost:25138/api/Book/BulkInsertBooks application/json;odata.metadata=minimal;odata.streaming=true 3244

…

BulkInsertBooks(System.Collections.Generic.List`1[BookLoan.Models.BookViewModel]) on controller BookLoan.Controllers.BookController (BookLoan.Catalog.API).

Microsoft.EntityFrameworkCore.Infrastructure: Information: Entity Framework Core 3.1.0 initialized 'ApplicationDbContext' using provider 'Microsoft.EntityFrameworkCore.SqlServer' with options: None

Microsoft.EntityFrameworkCore.Database.Command: Information: Executed DbCommand (177ms)

[Parameters=[

@p0='?' (Size = 4000), @p1='?' (DbType = DateTime2), @p2='?' (DbType = DateTime2), @p3='?' (Size = 4000), @p4='?' (Size = 4000), @p5='?' (Size = 4000), @p6='?' (Size = 4000), @p7='?' (Size = 4000), @p8='?' (Size = 4000), @p9='?' (DbType = Int32),

@p10='?' (Size = 4000), @p11='?' (DbType = DateTime2), @p12='?' (DbType = DateTime2), @p13='?' (Size = 4000), @p14='?' (Size = 4000), @p15='?' (Size = 4000), @p16='?' (Size = 4000), @p17='?' (Size = 4000), @p18='?' (Size = 4000), @p19='?' (DbType = Int32),

…

, @p190='?' (Size = 4000), @p191='?' (DbType = DateTime2), @p192='?' (DbType = DateTime2), @p193='?' (Size = 4000), @p194='?' (Size = 4000), @p195='?' (Size = 4000), @p196='?' (Size = 4000), @p197='?' (Size = 4000), @p198='?' (Size = 4000), @p199='?' (DbType = Int32)], CommandType='Text', CommandTimeout='30']

SET NOCOUNT ON;

DECLARE @inserted0 TABLE ([ID] int, [_Position] [int]);

MERGE [Books] USING (

VALUES (@p0, @p1, @p2, @p3, @p4, @p5, @p6, @p7, @p8, @p9, 0),

(@p10, @p11, @p12, @p13, @p14, @p15, @p16, @p17, @p18, @p19, 1),

…

(@p190, @p191, @p192, @p193, @p194, @p195, @p196, @p197, @p198, @p199, 19)) AS i ([Author], [DateCreated], [DateUpdated], [Edition], [Genre], [ISBN], [Location], [MediaType], [Title], [YearPublished], _Position) ON 1=0

WHEN NOT MATCHED THEN

INSERT ([Author], [DateCreated], [DateUpdated], [Edition], [Genre], [ISBN], [Location], [MediaType], [Title], [YearPublished])

VALUES (i.[Author], i.[DateCreated], i.[DateUpdated], i.[Edition], i.[Genre], i.[ISBN], i.[Location], i.[MediaType], i.[Title], i.[YearPublished])

OUTPUT INSERTED.[ID], i._Position

INTO @inserted0;

SELECT [t].[ID] FROM [Books] t

INNER JOIN @inserted0 i ON ([t].[ID] = [i].[ID])

ORDER BY [i].[_Position];

Microsoft.AspNetCore.Mvc.StatusCodeResult: Information: Executing HttpStatusCodeResult, setting HTTP status code 200

Microsoft.AspNetCore.Mvc.Infrastructure.ControllerActionInvoker: Information: Executed action BookLoan.Controllers.BookController.BulkInsertBooks (BookLoan.Catalog.API) in 19780.8407ms

Microsoft.AspNetCore.Routing.EndpointMiddleware: Information: Executed endpoint 'BookLoan.Controllers.BookController.BulkInsertBooks (BookLoan.Catalog.API)'

Microsoft.AspNetCore.Hosting.Diagnostics: Information: Request finished in 19844.8002ms 200

Notice that the SQL MERGE command has been used to build up the command parameters and insert the records into the Books table with one statement:

MERGE [Books] USING (

VALUES (@p0, @p1, @p2, @p3, @p4, @p5, @p6, @p7, @p8, @p9, 0),

…

WHEN NOT MATCHED THEN

INSERT ([Author], [DateCreated], [DateUpdated], [Edition], [Genre], [ISBN], [Location], [MediaType], [Title], [YearPublished])

VALUES (i.[Author], i.[DateCreated], i.[DateUpdated], i.[Edition], i.[Genre], i.[ISBN], i.[Location], i.[MediaType], i.[Title], i.[YearPublished])

OUTPUT INSERTED.[ID], i._Position

INTO @inserted0;

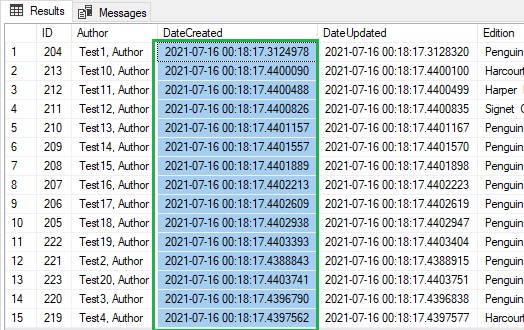

Checking the SQL table from SSMS, we see the date time fields have times that are quite close in interval:

By running a query on the date interval, we can see how the time span covers the batch query:

SELECT Date_Difference=DATEDIFF(

MILLISECOND, MIN([DateCreated]), MAX([DateCreated]))

FROM [aspnet-BookCatalog].[dbo].[Books]

WHERE [Id] >= [id of first new record]

In this case, the time span is 128ms.

Combined Inserts and Updates with Bulk Imports

In the next example, I will show how to apply a bulk import that combines inserts and updates into the same table.

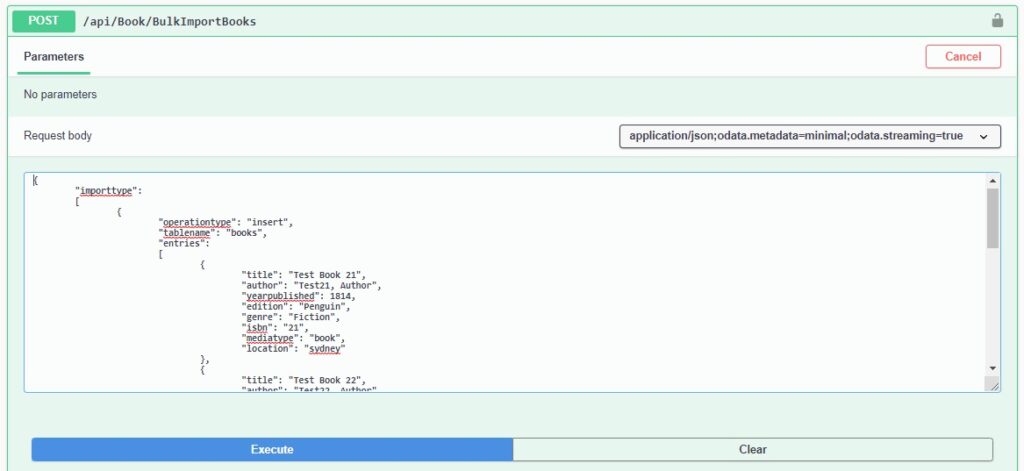

Suppose we have an input JSON that consists of a data structure that represents both inserts and updates for a table. The insert entries consist of records with all fields, with update entries consisting of a title and other non-empty or non-zero fields that will be updated for a record that matches the specified title field.

The input is shown below:

{

"importtype":

[

{

"operationtype": "insert",

"tablename": "books",

"entries":

[

{

"title": "Test Book 21",

"author": "Test21, Author",

"yearpublished": 1814,

"edition": "Penguin",

"genre": "Fiction",

"isbn": "21",

"mediatype": "book",

"location": "sydney"

},

{

"title": "Test Book 22",

"author": "Test22, Author",

"yearpublished": 1865,

"edition": "Penguin",

"genre": "Fiction",

"isbn": "22",

"mediatype": "book",

"location": "sydney"

}

]

},

{

"operationtype": "update",

"tablename": "books",

"entries":

[

{

"title": "Test Book 10",

"author": "",

"yearpublished": 0,

"edition": "",

"genre": "",

"isbn": "1011",

"mediatype": "",

"location": ""

},

{

"title": "Test Book 15",

"author": "",

"yearpublished": 0,

"edition": "",

"genre": "",

"isbn": "1511",

"mediatype": "",

"location": ""

}

]

}

]

}

To represent the above JSON data as model binder classes within ASP.NET Core, we declare the following classes, with the record entries generically typed:

public class BulkRecordImporterModel<T>

{

public RecordImportHeader<T>[] importType { get; set; }

}

public class RecordImportHeader<T>

{

public string operationType { get; set; }

public string tableName { get; set; }

public T[] entries { get; set; }

}

The controller API method is then implemented with the input parameter cast to the type:

BulkRecordImporterModel<BookViewModel>

The API method is shown below:

[HttpPost("api/[controller]/BulkImportBooks")]

public async Task<ActionResult> BulkImportBooks(

[FromBody] BulkRecordImporterModel<BookViewModel> model)

{

try

{

await _bookService.BulkImportBooks(model);

return Ok();

}

catch (Exception ex)

{

if (ex.InnerException != null)

return BadRequest($"Cannot import book records: {ex.Message},

inner exception: {ex.InnerException.Message}");

return BadRequest($"Cannot import book records: {ex.Message}");

}

}

The service method, BulkImportBooks() that implements the INSERT and UPDATE operations through the Entity Framework Core data context abstracted with a repository is shown below:

public async Task BulkImportBooks(BulkRecordImporterModel<BookViewModel>

importedData)

{

var saveChanges = false;

foreach (var importheader in importedData.importType)

{

if (importheader.operationType == "insert")

{

if (importheader.tableName == "books")

{

foreach (var entry in importheader.entries)

{

saveChanges = true;

entry.DateCreated = DateTime.Now;

entry.DateUpdated = DateTime.Now;

await _bookRepository.AddAsync(entry);

}

}

}

if (importheader.operationType == "update")

{

if (importheader.tableName == "books")

{

foreach (var entry in importheader.entries)

{

var book = await _bookRepository

.GetByTitle(entry.Title);

if (book != null)

{

saveChanges = true;

book.DateUpdated = DateTime.Now;

if (entry.Author.Length > 0)

book.Author = entry.Author;

if (entry.Genre.Length > 0)

book.Genre = entry.Genre;

if (entry.Edition.Length > 0)

book.Edition = entry.Edition;

if (entry.ISBN.Length > 0)

book.ISBN = entry.ISBN;

if (entry.Location.Length > 0)

book.Location = entry.Location;

if (entry.MediaType.Length > 0)

book.MediaType = entry.MediaType;

if (entry.YearPublished > 0)

book.YearPublished = entry.YearPublished;

await _bookRepository.UpdateAsync(book);

}

}

}

}

}

if (saveChanges)

await _bookRepository.UnitOfWork.SaveChangesAsync();

}

What we do is for each insert, we apply the data context insertion with:

await _bookRepository.AddAsync(entry);

And for each update, we apply the data context update with:

await _bookRepository.UpdateAsync(book);

At the end of the iterations and changes to the data context, we apply the changes with:

await _bookRepository.UnitOfWork.SaveChangesAsync();

The input is run through a Swagger interface for the API method:

The corresponding console output for the SQL queries is shown below:

Microsoft.EntityFrameworkCore.Infrastructure: Information: Entity Framework Core 3.1.0 initialized 'ApplicationDbContext' using provider 'Microsoft.EntityFrameworkCore.SqlServer' with options: MaxBatchSize=10

Microsoft.EntityFrameworkCore.Database.Command: Information: Executed DbCommand (246ms) [Parameters=[@__title_0='?' (Size = 4000)], CommandType='Text', CommandTimeout='30']

SELECT TOP(2) [b].[ID], [b].[Author], [b].[DateCreated], [b].[DateUpdated], [b].[Edition], [b].[Genre], [b].[ISBN], [b].[Location], [b].[MediaType], [b].[Title], [b].[YearPublished]

FROM [Books] AS [b]

WHERE [b].[Title] = @__title_0

Microsoft.EntityFrameworkCore.Database.Command: Information: Executed DbCommand (80ms) [Parameters=[@__title_0='?' (Size = 4000)], CommandType='Text', CommandTimeout='30']

SELECT TOP(2) [b].[ID], [b].[Author], [b].[DateCreated], [b].[DateUpdated], [b].[Edition], [b].[Genre], [b].[ISBN], [b].[Location], [b].[MediaType], [b].[Title], [b].[YearPublished]

FROM [Books] AS [b]

WHERE [b].[Title] = @__title_0

Microsoft.EntityFrameworkCore.Database.Command: Information: Executed DbCommand (516ms)

[Parameters=[

@p10='?' (DbType = Int32), @p0='?' (Size = 4000), @p1='?' (DbType = DateTime2),

@p2='?' (DbType = DateTime2), @p3='?' (Size = 4000), @p4='?' (Size = 4000),

@p5='?' (Size = 4000), @p6='?' (Size = 4000), @p7='?' (Size = 4000), @p8='?' (Size = 4000),

@p9='?' (DbType = Int32),

@p21='?' (DbType = Int32), @p11='?' (Size = 4000), @p12='?' (DbType = DateTime2),

@p13='?' (DbType = DateTime2), @p14='?' (Size = 4000), @p15='?' (Size = 4000),

@p16='?' (Size = 4000), @p17='?' (Size = 4000), @p18='?' (Size = 4000), @p19='?' (Size = 4000), @p20='?' (DbType = Int32),

@p22='?' (Size = 4000), @p23='?' (DbType = DateTime2), @p24='?' (DbType = DateTime2),

@p25='?' (Size = 4000), @p26='?' (Size = 4000), @p27='?' (Size = 4000), @p28='?' (Size = 4000), @p29='?' (Size = 4000), @p30='?' (Size = 4000), @p31='?' (DbType = Int32), @p32='?' (Size = 4000), @p33='?' (DbType = DateTime2), @p34='?' (DbType = DateTime2), @p35='?' (Size = 4000),

@p36='?' (Size = 4000), @p37='?' (Size = 4000), @p38='?' (Size = 4000), @p39='?' (Size = 4000), @p40='?' (Size = 4000), @p41='?' (DbType = Int32)], CommandType='Text', CommandTimeout='30']

SET NOCOUNT ON;

UPDATE [Books] SET [Author] = @p0, [DateCreated] = @p1, [DateUpdated] = @p2, [Edition] = @p3, [Genre] = @p4, [ISBN] = @p5, [Location] = @p6, [MediaType] = @p7, [Title] = @p8, [YearPublished] = @p9

WHERE [ID] = @p10;

SELECT @@ROWCOUNT;

UPDATE [Books] SET [Author] = @p11, [DateCreated] = @p12, [DateUpdated] = @p13, [Edition] = @p14, [Genre] = @p15, [ISBN] = @p16, [Location] = @p17, [MediaType] = @p18, [Title] = @p19, [YearPublished] = @p20

WHERE [ID] = @p21;

SELECT @@ROWCOUNT;

DECLARE @inserted2 TABLE ([ID] int, [_Position] [int]);

MERGE [Books] USING (

VALUES (@p22, @p23, @p24, @p25, @p26, @p27, @p28, @p29, @p30, @p31, 0),

(@p32, @p33, @p34, @p35, @p36, @p37, @p38, @p39, @p40, @p41, 1)) AS i ([Author], [DateCreated], [DateUpdated], [Edition], [Genre], [ISBN], [Location], [MediaType], [Title], [YearPublished], _Position) ON 1=0

WHEN NOT MATCHED THEN

INSERT ([Author], [DateCreated], [DateUpdated], [Edition], [Genre], [ISBN], [Location], [MediaType], [Title], [YearPublished])

VALUES (i.[Author], i.[DateCreated], i.[DateUpdated], i.[Edition], i.[Genre], i.[ISBN], i.[Location], i.[MediaType], i.[Title], i.[YearPublished])

OUTPUT INSERTED.[ID], i._Position

INTO @inserted2;

SELECT [t].[ID] FROM [Books] t

INNER JOIN @inserted2 i ON ([t].[ID] = [i].[ID])

ORDER BY [i].[_Position];

Microsoft.AspNetCore.Mvc.StatusCodeResult: Information: Executing HttpStatusCodeResult, setting HTTP status code 200

Microsoft.AspNetCore.Mvc.Infrastructure.ControllerActionInvoker: Information: Executed action BookLoan.Controllers.BookController.BulkImportBooks (BookLoan.Catalog.API) in 61216.7437ms

Microsoft.AspNetCore.Routing.EndpointMiddleware: Information: Executed endpoint 'BookLoan.Controllers.BookController.BulkImportBooks (BookLoan.Catalog.API)'

Microsoft.AspNetCore.Hosting.Diagnostics: Information: Request finished in 62718.6954ms 200

The SQL updates are applied before the SQL MERGE USING … INSERT statement.

All the above statements are applied on the server, making it an efficient execution.

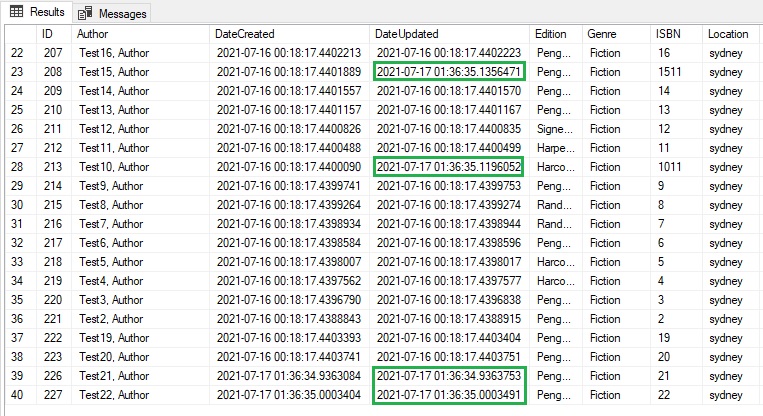

The resulting updates on the table data are shown below:

To determine the performance, I run a SQL query to obtain the duration of the INSERT and UPDATE batches:

SELECT MIN([DateCreated])

FROM [aspnet-BookCatalog].[dbo].[Books]

WHERE [Id] >= 224

UNION

SELECT MAX([DateUpdated])

FROM [aspnet-BookCatalog].[dbo].[Books]

WHERE [Title] IN ('Test Book 10', 'Test Book 15')

The durations turn out to be:

2021-07-17 01:36:34.9363084

2021-07-17 01:36:35.1356471

The duration is under 200ms, which shows that there is just one request to the server for the entire batch (inserts and updates).

When we are configuring the connection string in the start up, we can also set the minimum and maximum batch sizes so that large record sets that are bulk inserted or updated are split up into multiple commits as shown:

services.AddDbContext<ApplicationDbContext>(options =>

{

options.UseSqlServer(

Configuration.GetConnectionString(connStr),

opts =>

{

opts.MinBatchSize(1);

opts.MaxBatchSize(10);

}

);

});

We have seen how to apply a bulk insert of records and a mixed bulk update with inserts and updates. Both operations have involved one request to the server instead of multiple commits which require additional round trips to and from the backend SQL server.

The batch imports and updates are most useful when used in the following situations:

- Importing or updating records into a database.

- Pushing data changes from a user interface into a database.

- Offline user interface that is pushing changes from a disconnected data form.

That is all for today’s post.

I hope you found this post useful and informative.

Andrew Halil is a blogger, author and software developer with expertise of many areas in the information technology industry including full-stack web and native cloud based development, test driven development and Devops.